The SIG team, Systèmes d’Informations Généralisés ("Information Systems"), has existed since IRIT was founded and is one of the laboratory's largest teams, with 21 faculty members holding positions at 4 universities in the Occitanie region: Université Toulouse 1 Capitole, Université Toulouse 2 Jean Jaurès, Université Toulouse 3 Paul Sabatier, and Université Jean François Champollion (Ecole ISIS, Castres). The team also includes about thirty postdoctoral researchers, PhD students, interns, and research engineers.

The SIG team's research concerns data, in particular data management and the processing of today's large volumes of data ("Big Data"). It aims to develop methods, models, languages, and tools that give simple and efficient access to relevant information, in order to enable or improve its use, facilitate analysis, and support decision-making.

Our work covers a wide variety of data collections: scientific and business databases (aeronautics, space, energy, biology, health…), the Web and current mobile applications (user-generated content), open data, scientific benchmarks (CLEF, OAEI, SSB, TPC-H/DS, TREC…), knowledge or semantic data (ontologies), sensors and connected objects (IoT)…

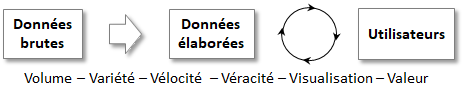

The SIG team's research covers the entire data processing chain, from raw data to elaborated data made accessible to users who are looking for information, want to visualize it, and want to carry out decision-support and predictive analyses.

This work is organized into 4 areas.

Automatic integration of heterogeneous data

The data available today form large volumes with disparate structure (ranging from structured and semi-structured to unstructured), widely distributed and often highly heterogeneous. Our work addresses the different facets of heterogeneity: entity heterogeneity, structural heterogeneity, and the syntactic and semantic heterogeneity of elements.

The challenge is to develop methods and algorithms that automatically find correspondences between elements drawn from two sources, or from multiple sources ("holistic alignments"), of data and knowledge. The correspondences sought can be simple (1:1) or complex (1:n, n:1, or n:m).
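As an illustration only, the simplest case (1:1 correspondences between two sources) can be sketched with a purely syntactic similarity and a greedy assignment. This is not the team's method: the function names, the attribute names, and the 0.6 threshold are hypothetical choices made for this example, and the similarity measure is Python's standard `difflib.SequenceMatcher`.

```python
from difflib import SequenceMatcher
from typing import Dict, List


def similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity in [0, 1] (purely syntactic)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def align_one_to_one(source: List[str], target: List[str],
                     threshold: float = 0.6) -> Dict[str, str]:
    """Greedy 1:1 alignment: best-scoring pairs first, each element used once."""
    candidates = sorted(
        ((similarity(s, t), s, t) for s in source for t in target),
        reverse=True,
    )
    alignment: Dict[str, str] = {}
    used_targets = set()
    for score, s, t in candidates:
        if score < threshold:
            break  # remaining pairs are all below the (assumed) threshold
        if s not in alignment and t not in used_targets:
            alignment[s] = t
            used_targets.add(t)
    return alignment


# Hypothetical attribute names from two sources to be integrated.
source_schema = ["customer_name", "email_address", "zip"]
target_schema = ["CustomerName", "EmailAddr", "postal_code"]
print(align_one_to_one(source_schema, target_schema))
```

With these made-up names, the two syntactically close pairs are aligned, while `zip` and `postal_code` are not: they share a meaning but few characters, which is the kind of semantic heterogeneity that a string measure alone cannot resolve. Complex correspondences (1:n, n:m) would need a different formulation than this one-element-per-side assignment.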

Management of non-conventional databases

Modern database management systems must now be able to handle large volumes of data characterized by great variety (conventional data such as relational databases, structured documents such as XML and JSON, text collections, domain ontologies…). They no longer rely on a uniformly structured, standard (relational) model, but on centralized storage systems (data warehouse, data lake) or on distributed systems based on non-conventional paradigms (key-value, document-oriented, column-oriented, graph-oriented). These non-conventional systems are also called NoSQL (not only SQL).

In this multi-model context, the challenge is to develop new design methods that integrate clearly formalized data representation models (concepts and formalisms) together with their associated manipulation languages. Each language defined must provide a complete, closed algebraic core of elementary operators that covers the model and guarantees the validity and expressive power of the language.
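To make the closure property concrete, here is a minimal sketch, assuming a document-oriented model in which a collection is simply a list of dictionaries. The two operators, `select` and `project`, and the sample data are hypothetical and chosen for illustration; they do not reproduce any language defined by the team. The point is only that each operator takes a collection and returns a collection, so operators compose freely.

```python
from typing import Any, Callable, Dict, List

Document = Dict[str, Any]
Collection = List[Document]


def select(coll: Collection, predicate: Callable[[Document], bool]) -> Collection:
    """Keep the documents that satisfy the predicate; the result is a collection."""
    return [doc for doc in coll if predicate(doc)]


def project(coll: Collection, fields: List[str]) -> Collection:
    """Keep only the listed fields of each document; the result is a collection."""
    return [{f: doc[f] for f in fields if f in doc} for doc in coll]


# Hypothetical collection with heterogeneous documents (one lacks "city").
people: Collection = [
    {"name": "Ada", "age": 36, "city": "Toulouse"},
    {"name": "Lin", "age": 28},
    {"name": "Sam", "age": 41, "city": "Castres"},
]

# Closure: the output of select is a valid input for project.
over_30 = project(select(people, lambda d: d.get("age", 0) > 30), ["name", "city"])
print(over_30)
```

Because every operator stays inside the model, an expression of any depth is still a valid query, which is one reading of what a closed core is meant to guarantee.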

User-oriented data

Knowledge of the user is essential when developing complex systems that can be more effective and, possibly, adapt. This knowledge is most often based on building a user profile, defined as a set of data characterizing the user, their context, and their usage.

In this context, our work focuses on defining contextual user profiles (spatio-temporal, egocentric) for a single user or a group of users. We use these profiles to develop algorithmic approaches for recommender and information filtering systems (diversity), as well as for social network analysis (detection of communities, fraud, influence, and sentiment).
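One common way to introduce diversity into a recommendation list is a greedy re-ranking in the style of maximal marginal relevance (MMR), which trades off an item's relevance against its similarity to items already selected. The sketch below is a generic illustration of that idea, not the team's approach: the item identifiers, relevance scores, tag sets, Jaccard similarity, and the `trade_off` parameter are all assumptions made for the example.

```python
from typing import Dict, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity between two tag sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rerank_mmr(relevance: Dict[str, float], tags: Dict[str, Set[str]],
               k: int, trade_off: float = 0.5) -> List[str]:
    """Greedily pick k items, penalizing similarity to already selected items."""
    selected: List[str] = []
    remaining = set(relevance)
    while remaining and len(selected) < k:
        def mmr(item: str) -> float:
            max_sim = max((jaccard(tags[item], tags[s]) for s in selected),
                          default=0.0)
            return trade_off * relevance[item] - (1 - trade_off) * max_sim
        # sorted() makes tie-breaking deterministic (alphabetical order).
        best = max(sorted(remaining), key=mmr)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical scores for one user profile, and hypothetical item tags.
relevance = {"a": 0.9, "b": 0.85, "c": 0.6}
tags = {"a": {"jazz", "piano"}, "b": {"jazz", "piano"}, "c": {"rock"}}
print(rerank_mmr(relevance, tags, k=2))
```

In this example, item `b` is nearly as relevant as `a` but carries the same tags, so the second slot goes to the less relevant but different item `c`. Setting `trade_off=1.0` falls back to a plain relevance ranking.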

Analysis, learning, and prediction in massive data

The advent of Big Data is revolutionizing computing. Humanity now produces gigantic amounts of data through the global Internet, mobile devices, and the Internet of Things, but also through scientific observation and sensing infrastructures (satellites, particle accelerators, DNA sequencers…). According to an IDC study for EMC (The digital universe of opportunities) published in April 2014, the volume of data produced is expected to grow tenfold between 2013 and 2020 (44 zettabytes). New algorithms are now being developed on top of machine clusters, making it possible to analyze these masses of data and to run simulations and predictions from them.

The SIG team conducts research on the parameterization of algorithms for data analysis and data mining, machine learning, and deep learning. Data intelligence is a key issue in data science, and it depends on the effectiveness of the algorithms and analysis methods. These approaches must be implemented while guaranteeing the widest possible reproducibility. This is generally hard to achieve because large, heterogeneous data collections are often of variable quality and follow imbalanced or sparse distributions. These characteristics require precise parameterizations, which make the approaches specific to a small subset of the data.